MOIRAI-MOE: Upgrading MOIRAI with Mixture-of-Experts for Enhanced Forecasting | by Nikos Kafritsas | Nov, 2024


The race to build the top foundation forecasting model is on!

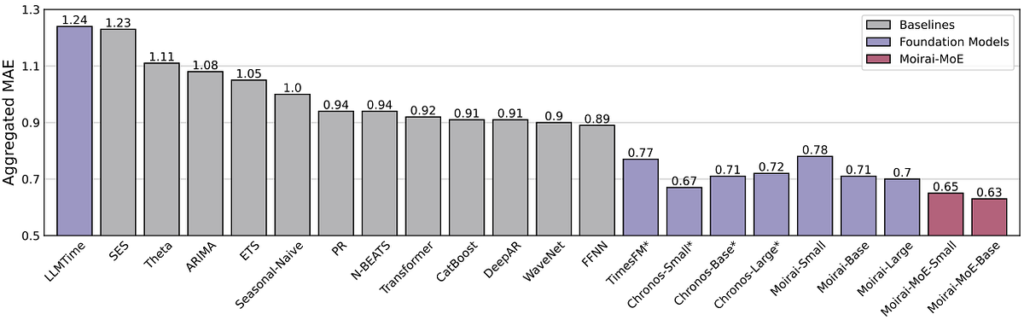

Salesforce’s MOIRAI, one of the early foundation models, achieved high benchmark results and was open-sourced along with its pretraining dataset, LOTSA.

We previously analyzed how MOIRAI works in detail and built an end-to-end project comparing MOIRAI with popular statistical models.

Salesforce has now released an upgraded version — MOIRAI-MOE — with significant improvements, particularly the addition of Mixture-of-Experts (MOE). We briefly discussed MOE when another model, Time-MOE, also used multiple experts.
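To make the term concrete before diving in, here is a minimal, generic sketch of sparse top-k Mixture-of-Experts routing. This is not MOIRAI-MOE's implementation. It assumes a simple linear gate (one hypothetical `gate_weights` vector per expert) and toy experts written as plain Python functions. The names `moe_forward` and `gate_weights` are illustrative only.

```python
import math
from typing import Callable, List, Sequence

Vector = List[float]


def softmax(logits: Sequence[float]) -> List[float]:
    """Numerically stable softmax over a list of scores."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def moe_forward(
    token: Vector,
    gate_weights: Sequence[Vector],
    experts: Sequence[Callable[[Vector], Vector]],
    top_k: int = 2,
) -> Vector:
    """Route one input embedding to its top-k experts and mix their outputs.

    gate_weights[i] is a hypothetical linear gating vector for expert i.
    """
    # Gating score per expert: dot product of the input with that expert's gate vector.
    scores = [sum(t * w for t, w in zip(token, g)) for g in gate_weights]
    # Keep only the k highest-scoring experts (sparse activation).
    top = sorted(range(len(experts)), key=lambda i: scores[i], reverse=True)[:top_k]
    # Softmax over the selected scores so the mixing weights sum to 1.
    weights = softmax([scores[i] for i in top])
    out = [0.0] * len(token)
    for w, i in zip(weights, top):
        y = experts[i](token)  # only the selected experts are evaluated
        out = [o + w * yi for o, yi in zip(out, y)]
    return out


# Usage: three toy experts, two of which get selected for this input.
experts = [
    lambda x: [2 * v for v in x],
    lambda x: [v + 1 for v in x],
    lambda x: [0.0 for _ in x],
]
gates = [[1.0, 0.0], [0.0, 1.0], [-1.0, -1.0]]
print(moe_forward([2.0, 1.0], gates, experts, top_k=2))
```

Because only `top_k` experts run for each input, compute scales with k rather than with the total number of experts. This is the general MoE idea; how MOIRAI-MOE actually gates and specializes its experts is a separate, model-specific design.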

In this article, we’ll cover:

- How MOIRAI-MOE works and why it’s a powerful model.

- Key differences between MOIRAI and MOIRAI-MOE.

- How MOIRAI-MOE’s use of Mixture-of-Experts enhances accuracy.

- How Mixture-of-Experts generally solves frequency variation issues in foundation time-series models.

Let’s get started.

✅ I’ve launched AI Horizon Forecast, a newsletter focusing on time-series and innovative…
