Lessons from agile, experimental chatbot development

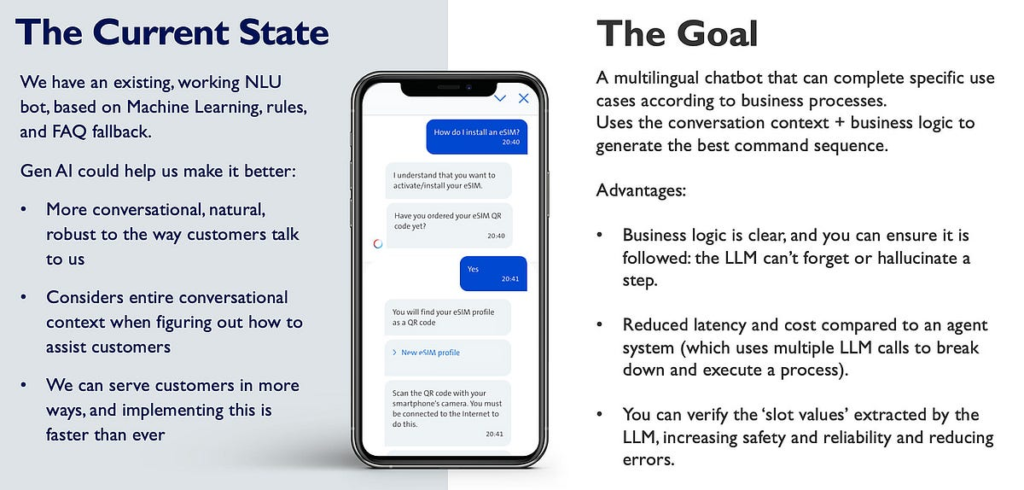

What happens when you take a working chatbot that’s already serving thousands of customers a day in four different languages, and try to deliver an even better experience using large language models (LLMs)? Good question.

It’s well known that evaluating and comparing LLMs is tricky. Benchmark datasets can be hard to come by, and metrics such as BLEU are imperfect. But those are largely academic concerns: How are industry data teams tackling these issues when incorporating LLMs into production projects?

In my work as a Conversational AI Engineer, I’m doing exactly that. And that’s how I ended up centre-stage at a recent data science conference, giving the (optimistically titled) talk, “No baseline? No benchmarks? No biggie!” Today’s post is a recap of that talk, featuring:

- The challenges of evaluating an evolving, LLM-powered PoC against a working chatbot

- How we’re using different types of testing at different stages of the PoC-to-production process

- Practical pros and cons of different test types
